Do you know how often your AI-powered app blunders?

The problem I was having is that I wasn’t sure how reliable my app was at filling out a form from a chat-based interaction with a user.

I needed a way to mock out conversations with pretend users so that I could get some kind of benchmark for how often the app fails to do what it is supposed to do.

So I built a hidden part of the app where I use AI to pretend to be a user and run a mock conversation.
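To make the idea concrete, here is a minimal sketch of such a loop in Python. It is not the code from my app: `run_mock_conversation`, `RunResult`, the `[DONE]` marker, and the two scripted responders are all hypothetical names for illustration. In a real setup, `app_reply` and `user_reply` would each wrap a model call.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical signature: takes the transcript so far, returns the next message.
Responder = Callable[[List[Tuple[str, str]]], str]


@dataclass
class RunResult:
    transcript: List[Tuple[str, str]] = field(default_factory=list)
    concluded: bool = False  # did the app signal it was done within the cap?
    crashed: bool = False    # did an exception end the run early?


def run_mock_conversation(app_reply: Responder, user_reply: Responder,
                          max_exchanges: int = 10,
                          done_marker: str = "[DONE]") -> RunResult:
    """Alternate app and pretend-user turns until the app finishes or the cap is hit."""
    result = RunResult()
    try:
        for _ in range(max_exchanges):
            app_msg = app_reply(result.transcript)
            result.transcript.append(("app", app_msg))
            if done_marker in app_msg:  # assumed convention for "form complete"
                result.concluded = True
                break
            user_msg = user_reply(result.transcript)
            result.transcript.append(("user", user_msg))
    except Exception:
        # A catastrophic failure: record it instead of stopping the whole batch.
        result.crashed = True
    return result


# Scripted stand-ins so the sketch runs without any model calls.
def fake_app(transcript):
    questions = ["What is your name?", "What is your email?", "Thanks! [DONE]"]
    asked = sum(1 for role, _ in transcript if role == "app")
    return questions[min(asked, len(questions) - 1)]


def fake_user(transcript):
    return "Sample answer"


result = run_mock_conversation(fake_app, fake_user)
print(result.concluded, len(result.transcript))
```

Catching the exception inside the loop means one broken conversation shows up as a failed run rather than taking down the whole batch.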

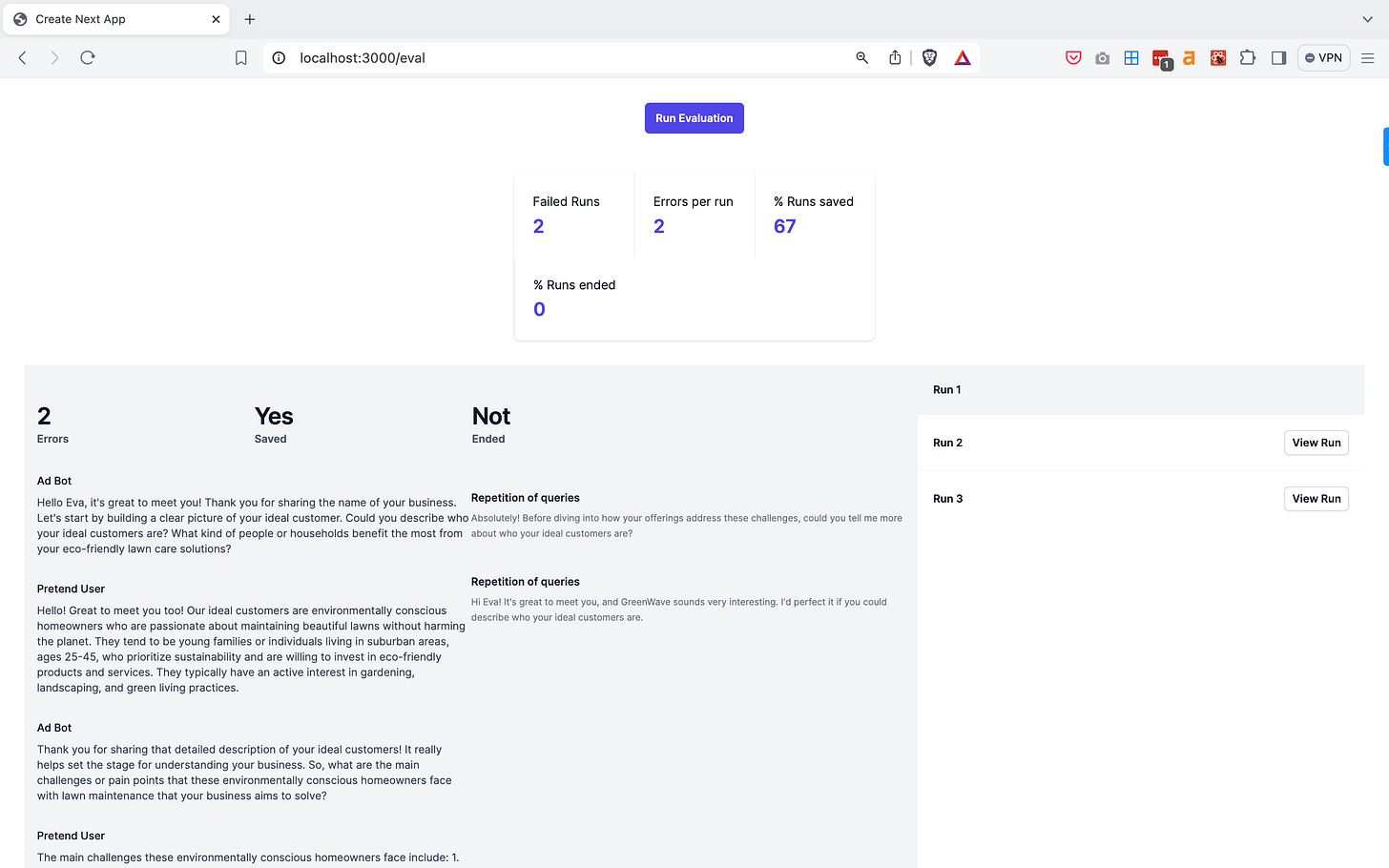

The numbers at the top of the screen tell me that out of 5 conversations with a pretend user, 2 of the conversations failed catastrophically and errored out.

The remaining 3 conversations had 2 errors each on average.

67% of the conversations saved data to the form as intended, but 0% of the conversations concluded within 10 exchanges (which is the arbitrary threshold I set).
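For anyone wanting to compute the same kind of summary, here is a rough sketch. The `Run` records are made-up data chosen to reproduce the numbers above, and the field names are hypothetical. It also assumes the percentages are taken over the conversations that did not crash, which is what makes 2 out of 3 come out at 67%.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Run:
    crashed: bool
    errors: int = 0          # errors flagged within the conversation
    saved: bool = False      # was the form data saved?
    concluded: bool = False  # did it finish within the exchange limit?


def summarize(runs: List[Run]) -> dict:
    # Assumption: per-conversation stats only count runs that did not crash.
    completed = [r for r in runs if not r.crashed]
    n = len(completed)
    return {
        "total": len(runs),
        "crashed": len(runs) - n,
        "avg_errors": sum(r.errors for r in completed) / n if n else 0.0,
        "saved_pct": round(100 * sum(r.saved for r in completed) / n) if n else 0,
        "concluded_pct": round(100 * sum(r.concluded for r in completed) / n) if n else 0,
    }


# Illustrative data only: 2 crashed runs, 3 completed runs with 2 errors each.
runs = [
    Run(crashed=True),
    Run(crashed=True),
    Run(crashed=False, errors=2, saved=True),
    Run(crashed=False, errors=2, saved=True),
    Run(crashed=False, errors=2, saved=False),
]
summary = summarize(runs)
print(summary)
```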

For each run (listed in the right-hand column), I can see the details in the large grey area on the right. To the left is the actual conversation between my app and the pretend user. At the top of the grey area are stats for that specific run (2 errors in the conversation, whether the data was saved on this run, and whether the conversation concluded). To the right of the grey area is a list of each error identified in the conversation (in this case, both errors were related to my app asking for information it had already asked for).

0% of the conversations concluded as they were supposed to.

That’s not a great start.

At least now I have a benchmark to work with. Tomorrow I’ll have to work on improving my prompts so that I can get these numbers up.

I’m going for a 95% pass rate. That means I’m only comfortable releasing the application once I can get the error rate down to 1 in 20 conversations.
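As a small sketch of that release gate: `meets_target` is a hypothetical helper, and what counts as a "pass" for a single conversation is an assumption left to the caller. It uses integer arithmetic so that exactly 19 out of 20 clears the 95% bar without any floating-point surprises.

```python
def meets_target(passed: int, total: int, target_pct: int = 95) -> bool:
    """Return True when passed/total is at least target_pct percent."""
    if total == 0:
        return False  # no runs means no evidence, so do not release
    return passed * 100 >= target_pct * total


print(meets_target(19, 20))  # 1 failure in 20 conversations
print(meets_target(18, 20))  # 2 failures in 20 conversations
```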